Oral exams have several advantages over written exams, including flexibility to meet individual student needs, reduced time pressure, and fairness. They allow students to express knowledge differently, clarify misunderstandings immediately, and make cheating difficult. Oral exams also train students to engage in technical discussions, requiring clear, precise expression and explanation of concepts using correct terminology. However, due to limited practice opportunities, preparing for oral exams can be challenging, and the unfamiliar format may cause anxiety.

To address this, we propose a dialogue model that simulates realistic conversations with an examiner so that students can practice the required skills. The model aims to generate context-relevant questions covering the exam topics, understand and accurately assess student responses, incorporate those responses into subsequent questions, and ultimately provide constructive feedback on overall performance. The interaction takes place in natural, spoken language. According to prior research, dialogue-based tutoring systems have been used successfully in educational programs for Socratic dialogues, which aim to deepen knowledge through questioning and investigation.

This leads to the research question: How can a conversational exam between students and examiners be modelled and implemented? To answer it, the investigation focuses on several key aspects. It explores how oral exams can be structured in practice, including the methods for identifying, selecting, or generating appropriate questions and their corresponding answers.

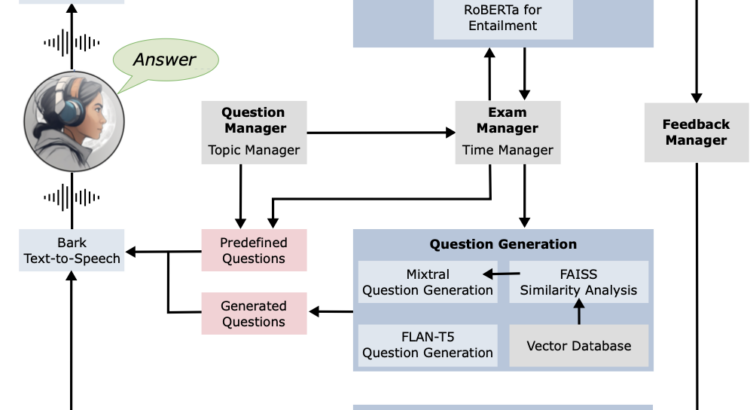

Additionally, it examines how student responses can be compared with correct solutions to evaluate performance. Finally, it considers how constructive feedback can be generated from the analysis of an exam conversation.

To implement the dialogue system, a component-based architecture must be defined. The concept involves integrating pre-trained language models and supplying educational materials as data for the system. Validating and critically assessing the system requires suitable metrics and evaluation techniques; selecting them is a challenge, because they must be tailored to the specific system.
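To make the three steps of asking questions, comparing responses with reference solutions, and generating feedback more concrete, the following is a minimal sketch and not the authors' implementation. It assumes a plain token-overlap (Jaccard) score as a stand-in for the pre-trained language models the concept calls for, and a text callback in place of speech input. All names (`ExamItem`, `score_response`, `run_exam`) and the 0.5 threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ExamItem:
    """One exam question with its reference solution (hypothetical structure)."""
    topic: str
    question: str
    reference_answer: str


def tokenize(text: str) -> set[str]:
    """Lowercase the text and strip surrounding punctuation from each word."""
    return {w.strip(".,;:!?()").lower() for w in text.split()} - {""}


def score_response(response: str, reference: str) -> float:
    """Jaccard overlap between response and reference tokens, in [0, 1].

    Assumption: a crude placeholder for a semantic comparison that a
    language model would perform in a real system.
    """
    a, b = tokenize(response), tokenize(reference)
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def run_exam(
    items: list[ExamItem],
    answer_fn: Callable[[str], str],
    threshold: float = 0.5,  # assumed per-answer pass mark
) -> tuple[list[tuple[ExamItem, float]], str]:
    """Ask every question, score each response, and summarise weak topics."""
    results = []
    for item in items:
        response = answer_fn(item.question)  # stands in for spoken input
        results.append((item, score_response(response, item.reference_answer)))

    weak = [item.topic for item, score in results if score < threshold]
    mean = sum(score for _, score in results) / len(results) if results else 0.0
    if weak:
        summary = f"Mean score {mean:.2f}. Revisit: {', '.join(weak)}."
    else:
        summary = f"Mean score {mean:.2f}. All topics answered adequately."
    return results, summary


if __name__ == "__main__":
    items = [
        ExamItem("stacks", "What is a stack?", "a stack is last in first out"),
        ExamItem("queues", "What is a queue?", "a queue is first in first out"),
    ]
    canned = {
        "What is a stack?": "A stack is last in, first out.",
        "What is a queue?": "I do not know",
    }
    _, summary = run_exam(items, lambda q: canned[q])
    print(summary)
```

A real system would replace `score_response` with a model-based assessment and choose follow-up questions based on earlier answers; the fixed question order here is only for brevity.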

Citation: Niels Seidel and Luna Hammesfahr. 2024. A Speech-Based Dialogue System for Training Oral Examinations. In Technology Enhanced Learning for Inclusive and Equitable Quality Education: 19th European Conference on Technology Enhanced Learning, EC-TEL 2024, Krems, Austria, September 16–20, 2024, Proceedings, Part II. Springer-Verlag, Berlin, Heidelberg, 254–259. https://doi.org/10.1007/978-3-031-72312-4_36